Authors:

(1) PIOTR MIROWSKI and KORY W. MATHEWSON, DeepMind, United Kingdom (both authors contributed equally to this research);

(2) JAYLEN PITTMAN, Stanford University, USA (work done while at DeepMind);

(3) RICHARD EVANS, DeepMind, United Kingdom.

Table of Links

Storytelling, The Shape of Stories, and Log Lines

The Use of Large Language Models for Creative Text Generation

Evaluating Text Generated by Large Language Models

Conclusions, Acknowledgements, and References

A. RELATED WORK ON AUTOMATED STORY GENERATION AND CONTROLLABLE STORY GENERATION

B. ADDITIONAL DISCUSSION FROM PLAYS BY BOTS CREATIVE TEAM

C. DETAILS OF QUANTITATIVE OBSERVATIONS

E. FULL PROMPT PREFIXES FOR DRAMATRON

F. RAW OUTPUT GENERATED BY DRAMATRON

D. SUPPLEMENTARY FIGURES

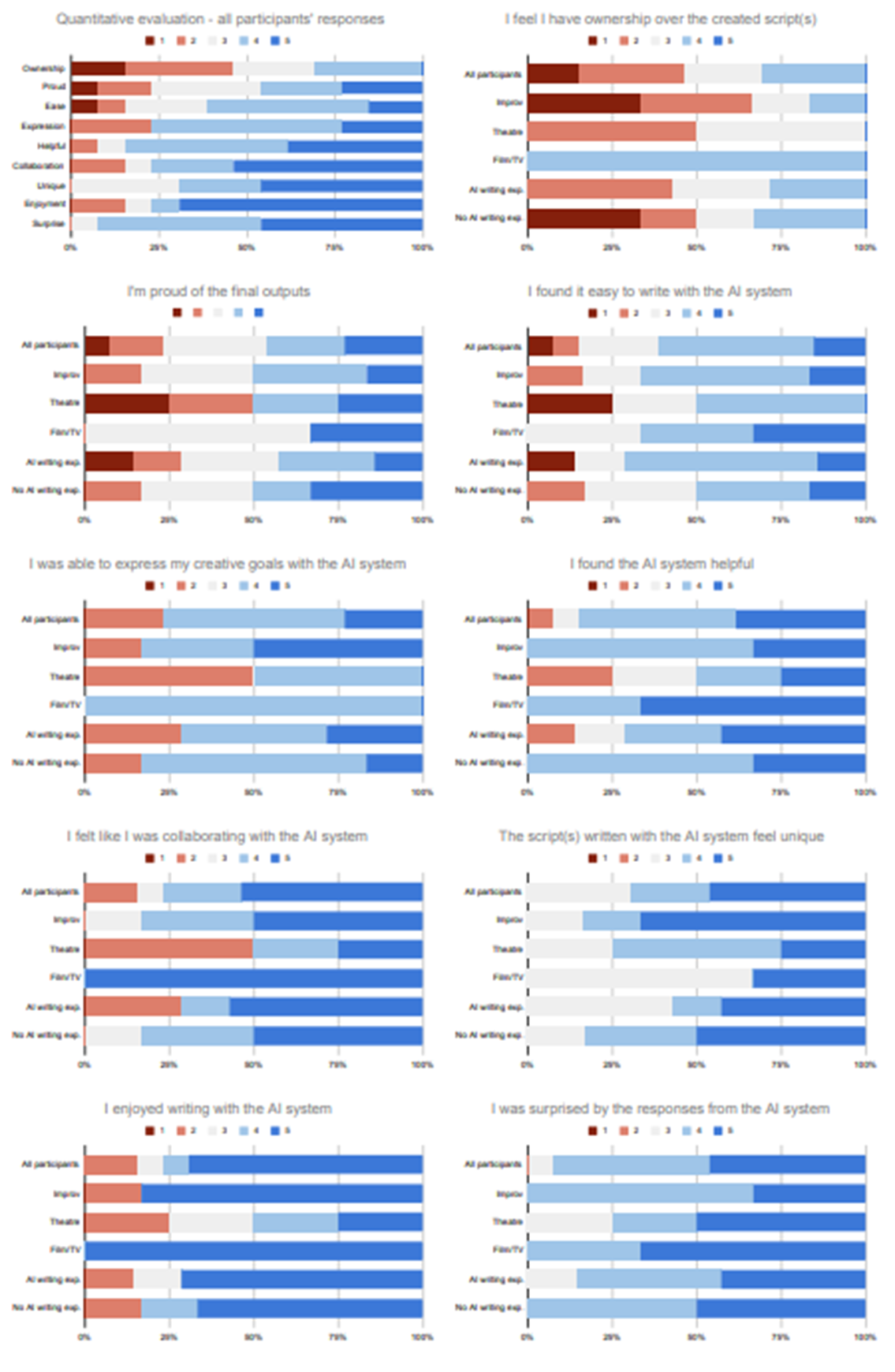

Figure 7 shows the participants’ responses to the quantitative evaluation on a Likert-type scale ranging from 1 (strongly disagree) to 5 (strongly agree), broken down by two groupings of participants. For the first grouping, we defined a binary indicator variable (Has experience of AI writing tools). For the second grouping, we defined a three-class category for each participant's primary domain of expertise (Improvisation, Scripted Theatre, and Film or TV).
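The breakdown described for Figure 7 can be sketched as a simple per-group tally of Likert scores. The snippet below is only an illustration under stated assumptions: the record layout, the function name `likert_counts_by_group`, and the four records are hypothetical placeholders, not the study's data or the authors' analysis code.

```python
from collections import Counter, defaultdict

# Hypothetical placeholder records, NOT the study's data.
# Each record: (has_ai_tool_experience, primary_domain, likert_score)
responses = [
    (True, "Improvisation", 4),
    (False, "Scripted Theatre", 5),
    (True, "Film or TV", 3),
    (False, "Improvisation", 4),
]

LIKERT_LEVELS = range(1, 6)  # 1 = strongly disagree ... 5 = strongly agree


def likert_counts_by_group(records, key):
    """Count how often each Likert level occurs within each group."""
    counts = defaultdict(Counter)
    for record in records:
        group = key(record)
        score = record[2]
        if score not in LIKERT_LEVELS:
            raise ValueError(f"score {score} is outside the 1-5 scale")
        counts[group][score] += 1
    # Report every level, including those that received no responses.
    return {
        group: [tally[level] for level in LIKERT_LEVELS]
        for group, tally in counts.items()
    }


# First grouping: binary indicator (has experience of AI writing tools).
by_experience = likert_counts_by_group(responses, key=lambda r: r[0])

# Second grouping: three-class primary domain of expertise.
by_domain = likert_counts_by_group(responses, key=lambda r: r[1])
```

Each resulting list holds the number of responses at levels 1 through 5 for one group, which is the kind of per-group distribution a stacked bar chart of Likert responses would typically display.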

This paper is available on arXiv under a CC 4.0 license.